Context Engineering

之前,我们习惯用 "prompt" 来描述给 LLM 的任务指令——比如 "请解释为什么天空是蓝色的",并由此诞生了 Prompt Engineering。近期,大家发现,在复杂的 LLM 应用中,上下文工程 (Context Engineering) 才是更准确的术语。如果说 Prompt Engineering 是教会大家如何给 LLM 准确的下达命令,那 Context Engineering 更像是教会大家如何给 LLM 准备好所有处理任务所需要的环境、工具和资料。

本文是对 LangChain 官方博客 Context Engineering for Agents 的中文解读,旨在帮助中文开发者更好地理解上下文工程的核心概念和实现。引用部分为文章原文段落,紧接是对该段落的中文解释。

TL;DR

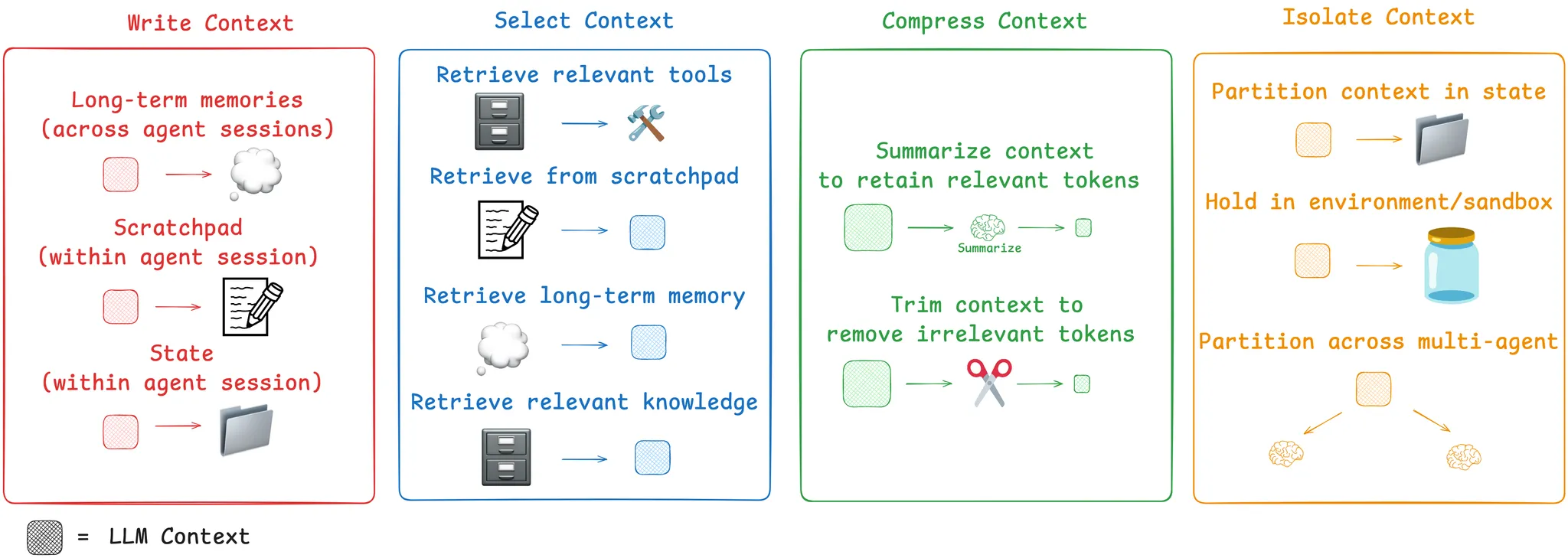

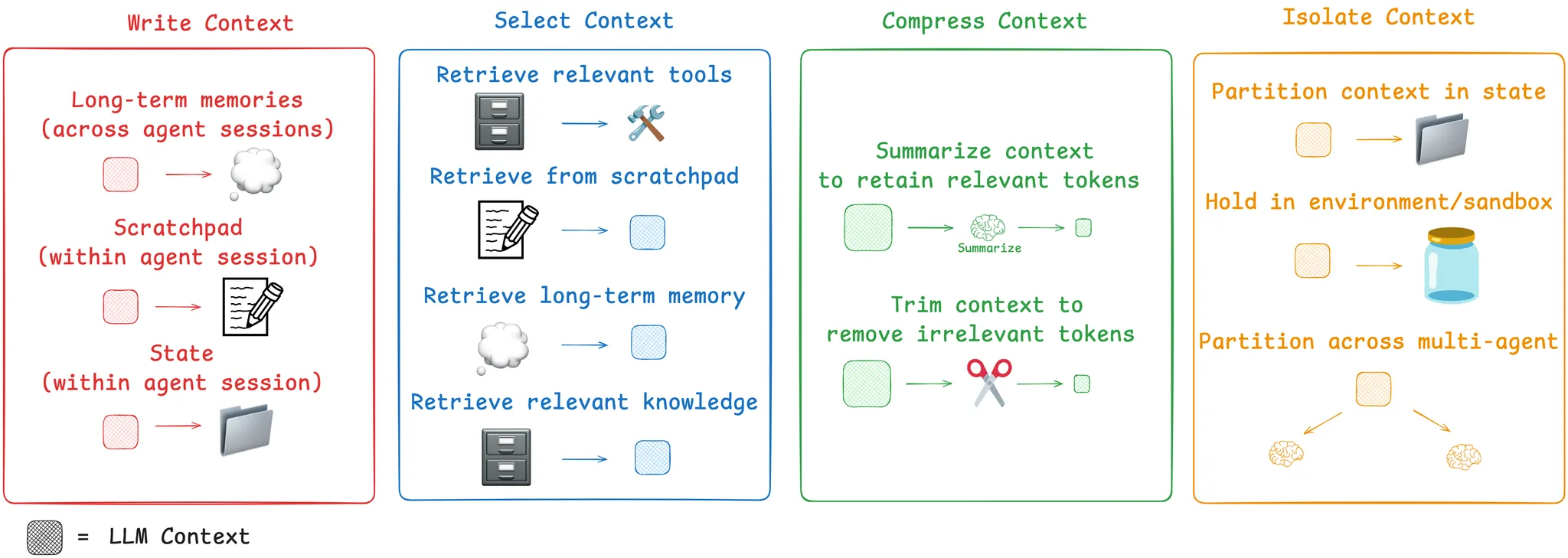

Agents need context to perform tasks. Context engineering is the art and science of filling the context window with just the right information at each step of an agent's trajectory. In this post, we break down some common strategies — write, select, compress, and isolate — for context engineering by reviewing various popular agents and papers. We then explain how LangGraph is designed to support them!

智能体 (Agents) 在执行任务时需要上下文信息。上下文工程就是在智能体执行的每个步骤中,为其上下文窗口提供恰到好处的信息。这篇文章介绍了四种常见的上下文工程策略:写入 (write)、选择 (select)、压缩 (compress) 和隔离 (isolate)。通过分析当前流行的智能体系统和研究论文,文章展示了这些策略的实际应用,并说明了 LangGraph 框架如何支持这些策略的实现。

Context Engineering (上下文工程)

As Andrej Karpathy puts it, LLMs are like a new kind of operating system. The LLM is like the CPU and its context window is like the RAM, serving as the model's working memory. Just like RAM, the LLM context window has limited capacity to handle various sources of context. And just as an operating system curates what fits into a CPU's RAM, we can think about "context engineering" playing a similar role.

Andrej Karpathy 将大语言模型比作一种新型操作系统。在这个类比中,LLM 就像 CPU,而上下文窗口就像 RAM,充当模型的工作内存。正如 RAM 的容量有限,LLM 的上下文窗口也只能处理有限的信息源。就像操作系统需要管理 RAM 中的内容一样,上下文工程承担着类似的角色,决定什么信息应该被放入 LLM 的上下文窗口中。

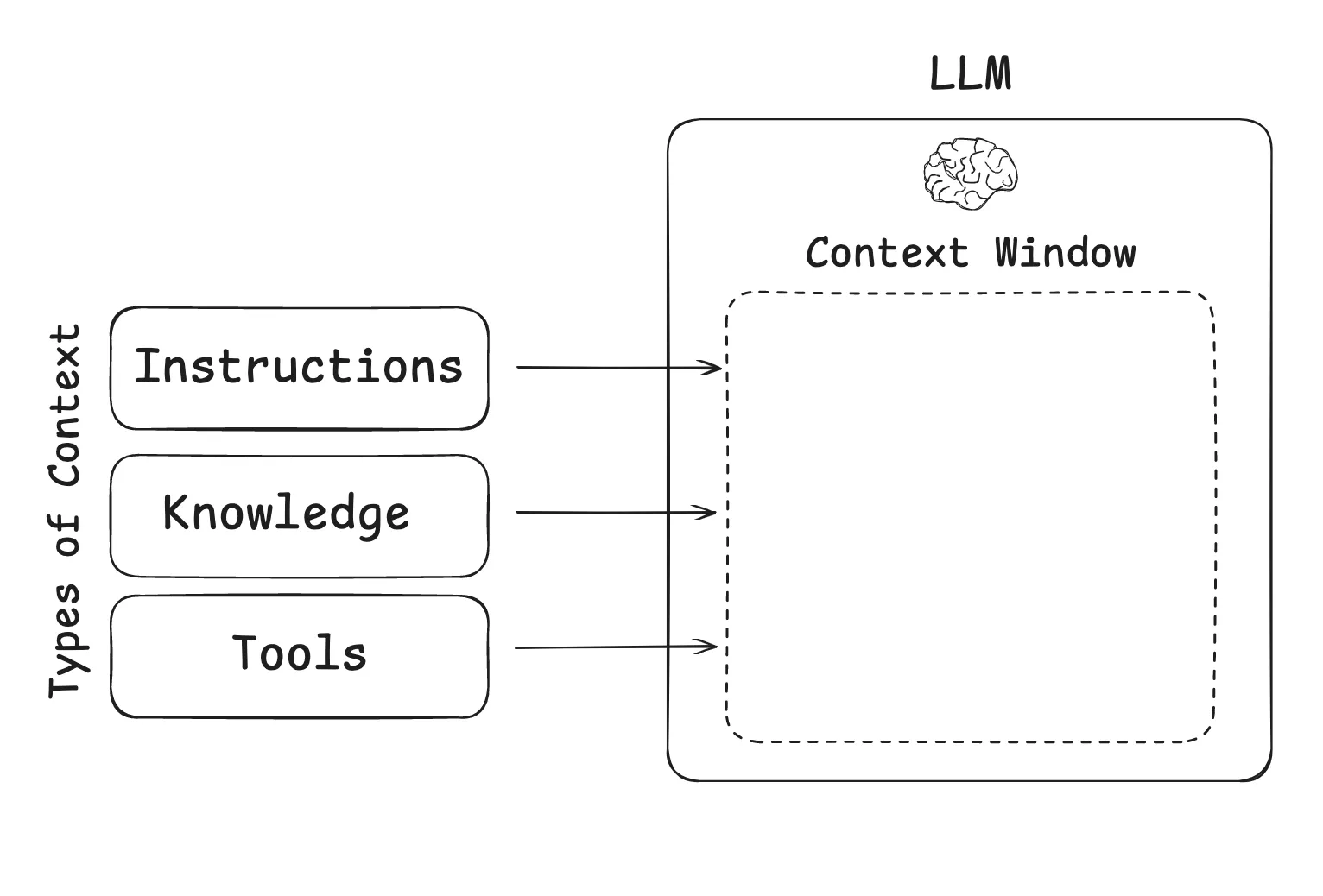

What are the types of context that we need to manage when building LLM applications? Context engineering as an umbrella that applies across a few different context types:

- Instructions – prompts, memories, few‑shot examples, tool descriptions, etc

- Knowledge – facts, memories, etc

- Tools – feedback from tool calls

在构建 LLM 应用时,我们需要管理三种主要的上下文类型:

- 指令类:包括提示词、记忆、少样本示例、工具描述等

- 知识类:包括事实信息、记忆等

- 工具类:工具调用的反馈信息

Context Engineering for Agents (智能体的上下文工程)

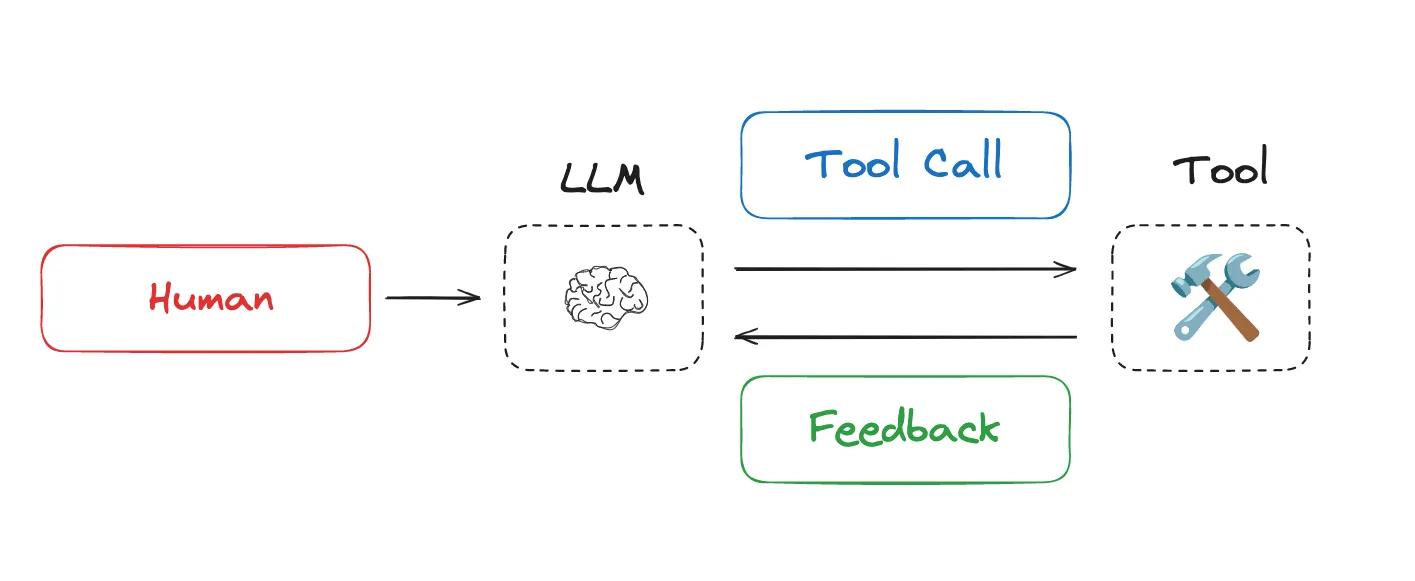

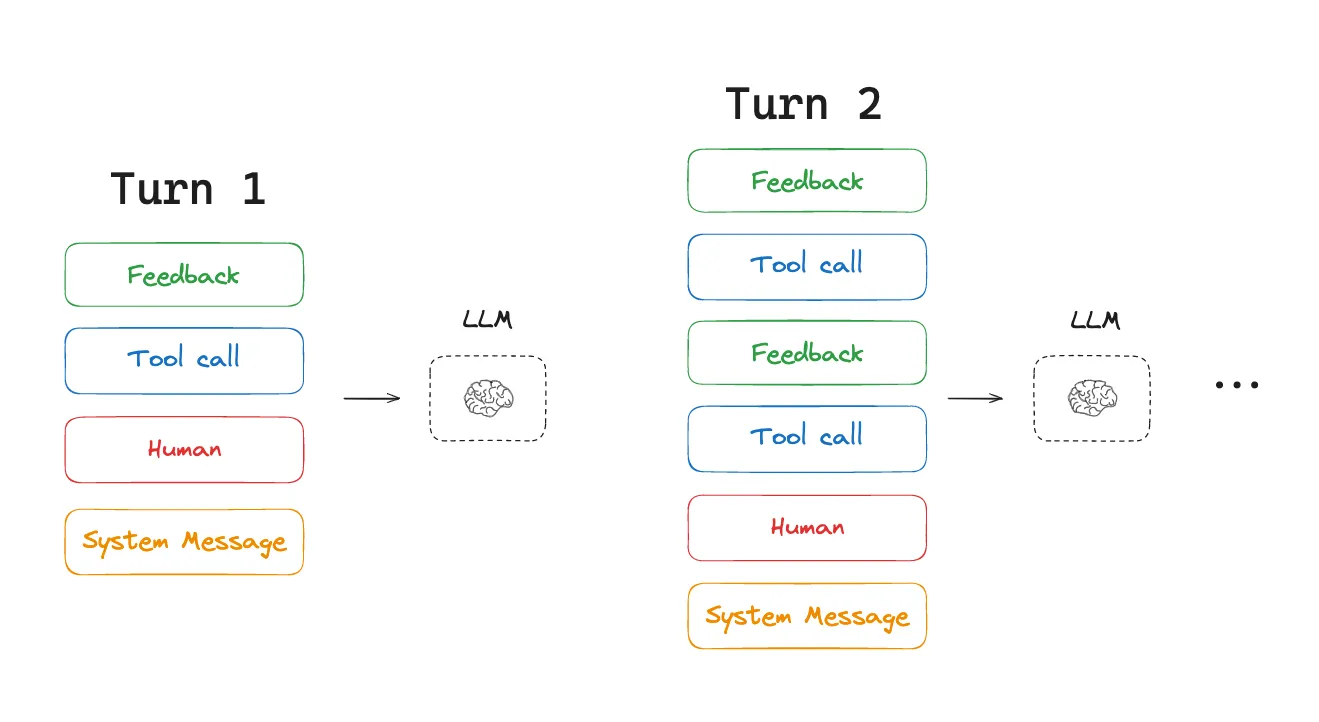

This year, interest in agents has grown tremendously as LLMs get better at reasoning and tool calling. Agents interleave LLM invocations and tool calls, often for long-running tasks. Agents interleave LLM calls and tool calls, using tool feedback to decide the next step.

随着 LLM 在推理和工具调用能力上的提升,智能体系统在今年受到了极大关注。智能体通过交替进行 LLM 调用和工具调用来完成任务,特别是在处理长时间运行的任务时。智能体会利用工具调用的反馈来决定下一步的行动。

However, long-running tasks and accumulating feedback from tool calls mean that agents often utilize a large number of tokens. This can cause numerous problems: it can exceed the size of the context window, balloon cost / latency, or degrade agent performance. Drew Breunig nicely outlined a number of specific ways that longer context can cause perform problems, including:

- Context Poisoning: When a hallucination makes it into the context

- Context Distraction: When the context overwhelms the training

- Context Confusion: When superfluous context influences the response

- Context Clash: When parts of the context disagree

长时间运行的任务和不断累积的工具反馈会导致智能体消耗大量的 token。这会带来多个问题:可能超出上下文窗口的限制、增加成本和延迟,或者降低智能体的性能。Drew Breunig 总结了长上下文可能导致的几种性能问题:

- 上下文中毒:错误信息进入上下文

- 上下文干扰:上下文信息压倒了模型的训练知识

- 上下文混淆:无关的上下文影响了输出

- 上下文冲突:上下文的不同部分相互矛盾

With this in mind, Cognition called out the importance of context engineering: "Context engineering" … is effectively the #1 job of engineers building AI agents.

Anthropic also laid it out clearly: Agents often engage in conversations spanning hundreds of turns, requiring careful context management strategies.

基于这些挑战,Cognition 公司指出上下文工程是构建 AI 智能体的首要任务。Anthropic 也明确表示,智能体经常需要进行数百轮的对话,这需要精心设计的上下文管理策略。

So, how are people tackling this challenge today? We group common strategies for agent context engineering into four buckets — write, select, compress, and isolate — and give examples of each from review of some popular agent products and papers. We then explain how LangGraph is designed to support them!

目前业界如何应对这些挑战?文章将常见的上下文工程策略归纳为四类:写入、选择、压缩和隔离。下面将通过实际案例详细介绍每种策略。

Write Context (写入上下文)

Writing context means saving it outside the context window to help an agent perform a task.

写入上下文是指将信息保存在上下文窗口之外,以帮助智能体完成任务。

Scratchpads (草稿本)

When humans solve tasks, we take notes and remember things for future, related tasks. Agents are also gaining these capabilities! Note-taking via a "scratchpad" is one approach to persist information while an agent is performing a task. The idea is to save information outside of the context window so that it's available to the agent. Anthropic's multi-agent researcher illustrates a clear example of this:

The LeadResearcher begins by thinking through the approach and saving its plan to Memory to persist the context, since if the context window exceeds 200,000 tokens it will be truncated and it is important to retain the plan.

就像人类解决问题时会记笔记一样,智能体也在获得这种能力。Scratchpad 是一种在任务执行过程中持久化信息的方法。核心思想是将信息保存在上下文窗口之外,使智能体在需要时可以访问。Anthropic 的多智能体研究系统中有一个很好的例子:主研究员智能体会先思考解决方案,并将计划保存到内存中,因为当上下文窗口超过 20 万 token 时会被截断,一开始的计划就丢失了。

Scratchpads can be implemented in a few different ways. They can be a tool call that simply writes to a file. They can also be a field in a runtime state object that persists during the session. In either case, scratchpads let agents save useful information to help them accomplish a task.

Scratchpad 可以通过多种方式实现:可以是一个简单写入文件的工具调用,也可以是运行时状态对象中的一个字段,在会话期间持续存在。无论采用哪种方式,scratchpad 都能让智能体保存有用的信息来帮助完成任务。

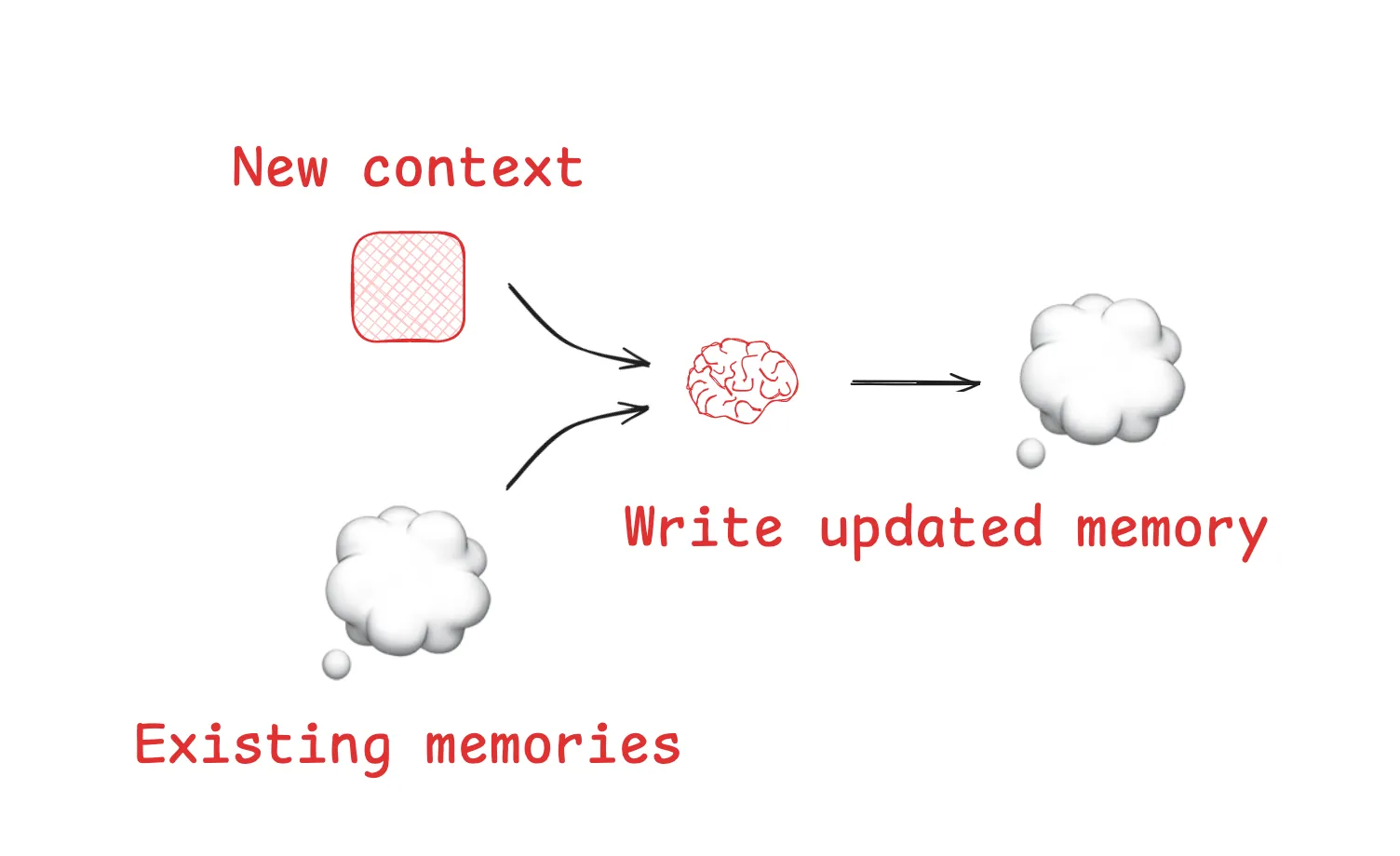

Memories (记忆)

Scratchpads help agents solve a task within a given session (or thread), but sometimes agents benefit from remembering things across many sessions! Reflexion introduced the idea of reflection following each agent turn and re-using these self-generated memories. Generative Agents created memories synthesized periodically from collections of past agent feedback.

Scratchpad 帮助智能体在单个会话中解决任务,但有时智能体需要跨会话记住信息。Reflexion 论文引入了反思机制,在每个智能体回合后进行反思并重用这些自生成的记忆。Generative Agents 则定期从过去的智能体反馈中合成记忆。

These concepts made their way into popular products like ChatGPT, Cursor, and Windsurf, which all have mechanisms to auto-generate long-term memories that can persist across sessions based on user-agent interactions.

这些概念已经被应用到 ChatGPT、Cursor 和 Windsurf 等流行产品中,它们都具有基于用户与智能体交互自动生成长期记忆的机制,这些记忆可以跨会话持续存在。

Select Context (选择上下文)

Selecting context means pulling it into the context window to help an agent perform a task.

选择上下文是指将相关信息拉入上下文窗口来帮助智能体执行任务。

Scratchpad

The mechanism for selecting context from a scratchpad depends upon how the scratchpad is implemented. If it's a tool, then an agent can simply read it by making a tool call. If it's part of the agent's runtime state, then the developer can choose what parts of state to expose to an agent each step. This provides a fine-grained level of control for exposing scratchpad context to the LLM at later turns.

从 scratchpad 选择上下文的机制取决于其实现方式。如果是工具形式,智能体可以通过工具调用来读取。如果是运行时状态的一部分,开发者可以选择在每个步骤中向智能体暴露状态的哪些部分。这提供了细粒度的控制,可以在后续回合中精确地向 LLM 暴露 scratchpad 上下文。

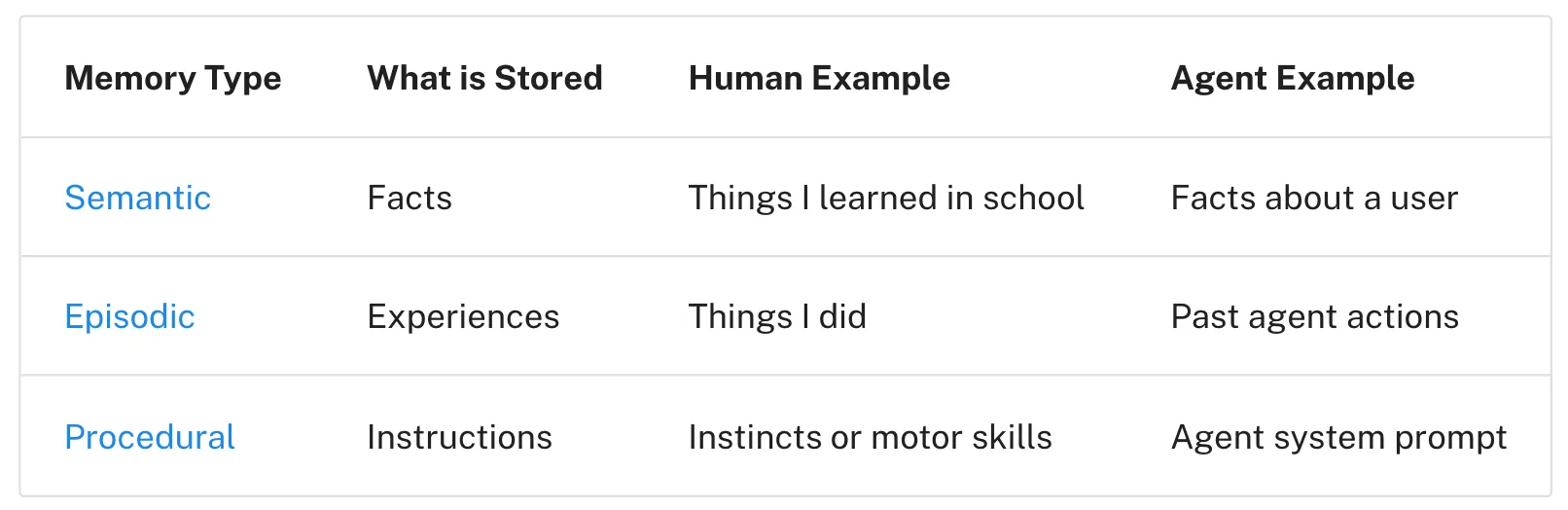

Memories

If agents have the ability to save memories, they also need the ability to select memories relevant to the task they are performing. This can be useful for a few reasons. Agents might select few-shot examples (episodic memories) for examples of desired behavior, instructions (procedural memories) to steer behavior, or facts (semantic memories) for task-relevant context.

如果智能体能够保存记忆,它们也需要能够选择与当前任务相关的记忆。这有几个用途:智能体可能会选择少样本示例 (情节记忆) 作为期望行为的例子、选择指令 (程序记忆) 来引导行为,或选择事实 (语义记忆) 作为任务相关的上下文。

One challenge is ensuring that relevant memories are selected. Some popular agents simply use a narrow set of files that are always pulled into context. For example, many code agent use specific files to save instructions ("procedural" memories) or, in some cases, examples ("episodic" memories). Claude Code uses CLAUDE.md. Cursor and Windsurf use rules files.

如何选择相关记忆是一个挑战。一些流行的智能体简单地使用固定的文件存储记忆,这些文件总是被拉入上下文。例如,许多 coding agent 使用特定文件来保存指令 (程序记忆) 或示例 (情节记忆)。Claude Code 使用 CLAUDE.md 文件,Cursor 和 Windsurf 使用规则文件。

But, if an agent is storing a larger collection of facts and / or relationships (e.g., semantic memories), selection is harder. ChatGPT is a good example of a popular product that stores and selects from a large collection of user-specific memories.

Embeddings and / or knowledge graphs for memory indexing are commonly used to assist with selection. Still, memory selection is challenging. At the AIEngineer World's Fair, Simon Willison shared an example of selection gone wrong: ChatGPT fetched his location from memories and unexpectedly injected it into a requested image. This type of unexpected or undesired memory retrieval can make some users feel like the context window "no longer belongs to them"!

但如果智能体存储了大量的事实和关系 (如语义记忆),选择就变得更加困难。ChatGPT 是一个很好的例子,它存储并从大量用户特定记忆中进行选择。Embeddings 和知识图谱常用于辅助记忆索引和选择。然而,记忆选择仍然充满挑战。Simon Willison 在 AIEngineer 世界博览会上分享了一个选择出错的例子:ChatGPT 从记忆中获取了他的位置信息,并意外地将其注入到请求的图像中。这种意外或不期望的记忆检索会让用户感觉上下文窗口 "不再属于他们"。

Tools

Agents use tools, but can become overloaded if they are provided with too many. This is often because the tool descriptions overlap, causing model confusion about which tool to use. One approach is to apply RAG (retrieval augmented generation) to tool descriptions in order to fetch only the most relevant tools for a task. Some recent papers have shown that this improve tool selection accuracy by 3-fold.

智能体使用工具,但如果提供太多工具会导致过载。这通常是因为工具描述存在重叠,导致模型在选择工具时产生混淆。一种方法是对工具描述应用 RAG (Retrieval Augmented Generation) ,只获取与任务最相关的工具。最近的研究表明,这种方法可以将工具选择的准确性提高 3 倍。

Knowledge

RAG is a rich topic and it can be a central context engineering challenge. Code agents are some of the best examples of RAG in large-scale production. Varun from Windsurf captures some of these challenges well:

Indexing code ≠ context retrieval … [We are doing indexing & embedding search … [with] AST parsing code and chunking along semantically meaningful boundaries … embedding search becomes unreliable as a retrieval heuristic as the size of the codebase grows … we must rely on a combination of techniques like grep/file search, knowledge graph based retrieval, and … a re-ranking step where [context] is ranked in order of relevance.

RAG 是一个内容很多的主题,也是上下文工程的核心挑战。Coding Agent 是大规模生产环境中 RAG 应用的最佳例子。Windsurf 的 Varun 很好地总结了这些挑战:代码索引不等于上下文检索。他们使用 AST 解析代码并沿着语义边界进行分块,但随着代码库规模的增长,embedding 搜索作为检索启发式方法变得不可靠。必须依赖多种技术的组合,如 grep/文件搜索、基于知识图谱的检索,以及按相关性排序的重排序步骤。

Compressing Context (压缩上下文)

Compressing context involves retaining only the tokens required to perform a task.

压缩上下文涉及只保留执行任务所需的 token。

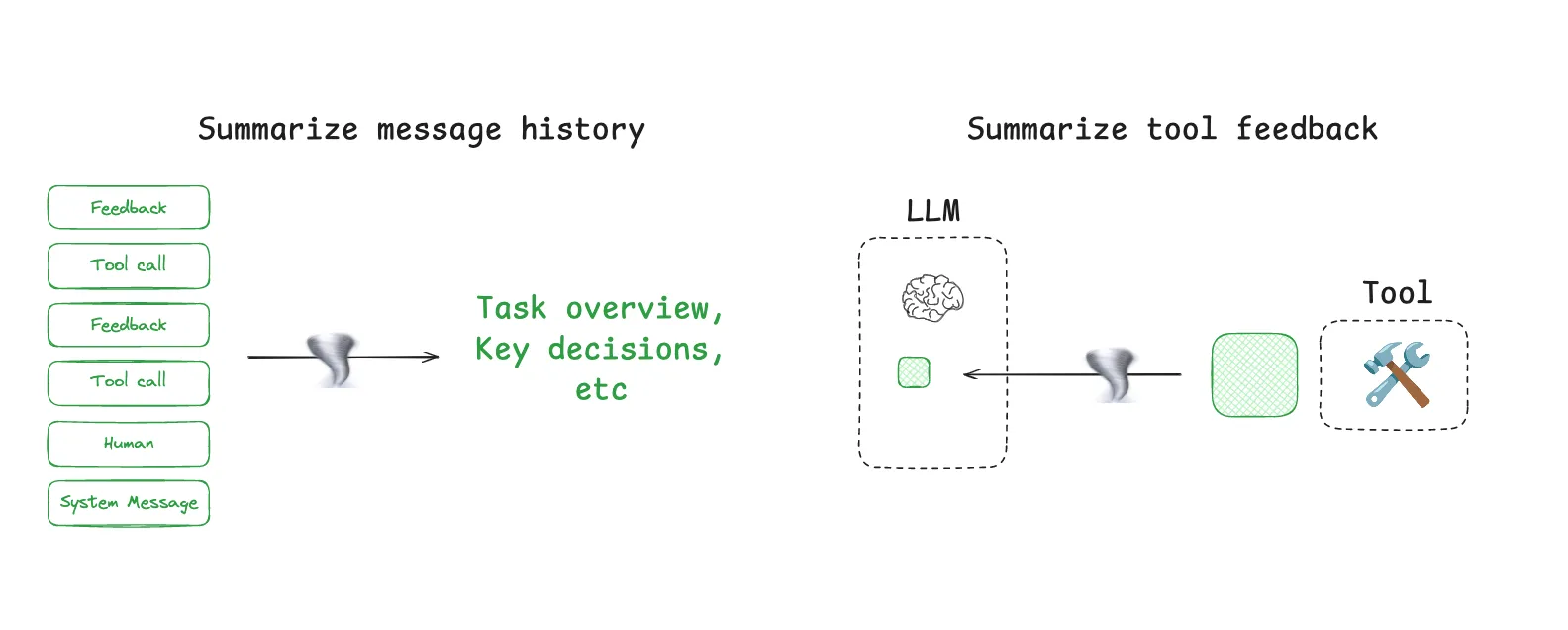

Context Summarization (上下文总结)

Agent interactions can span hundreds of turns and use token-heavy tool calls. Summarization is one common way to manage these challenges. If you've used Claude Code, you've seen this in action. Claude Code runs "auto-compact" after you exceed 95% of the context window and it will summarize the full trajectory of user-agent interactions. This type of compression across an agent trajectory can use various strategies such as recursive or hierarchical summarization.

智能体交互可能跨越数百轮对话并使用大量 token 的工具调用。总结是管理这些挑战的常见方法。如果你使用过 Claude Code,就会看到这个功能的实际应用。当超过上下文窗口的 95% 时,Claude Code 会运行 "自动压缩",总结用户与智能体交互的完整轨迹。这种跨智能体轨迹的压缩可以使用递归或分层总结等多种策略。

It can also be useful to add summarization at specific points in an agent's design. For example, it can be used to post-process certain tool calls (e.g., token-heavy search tools). As a second example, Cognition mentioned summarization at agent-agent boundaries to reduce tokens during knowledge hand-off. Summarization can be a challenge if specific events or decisions need to be captured. Cognition uses a fine-tuned model for this, which underscores how much work can go into this step.

在智能体设计的特定点添加总结也很有用。例如,可以用来后处理某些工具调用 (如消耗大量 token 的搜索工具)。Cognition 提到在智能体之间的边界进行总结,以减少知识传递时的 token 使用。如果需要捕获特定事件或决策,总结可能会成为挑战。Cognition 为此使用了微调模型,这突显了这一步骤所需的工作量。

Context Trimming (上下文修剪)

Whereas summarization typically uses an LLM to distill the most relevant pieces of context, trimming can often filter or, as Drew Breunig points out, "prune" context. This can use hard-coded heuristics like removing older messages from a list. Drew also mentions Provence, a trained context pruner for Question-Answering.

与使用 LLM 提炼最相关上下文片段的总结不同,修剪通常通过过滤或 "剪枝" 来处理上下文。这可以使用硬编码的启发式方法,如从列表中删除较旧的消息。Drew 还提到了 Provence,这是一个用于问答任务的训练过的上下文修剪器。

Isolating Context (隔离上下文)

Isolating context involves splitting it up to help an agent perform a task.

隔离上下文涉及将其分割以帮助智能体执行任务。

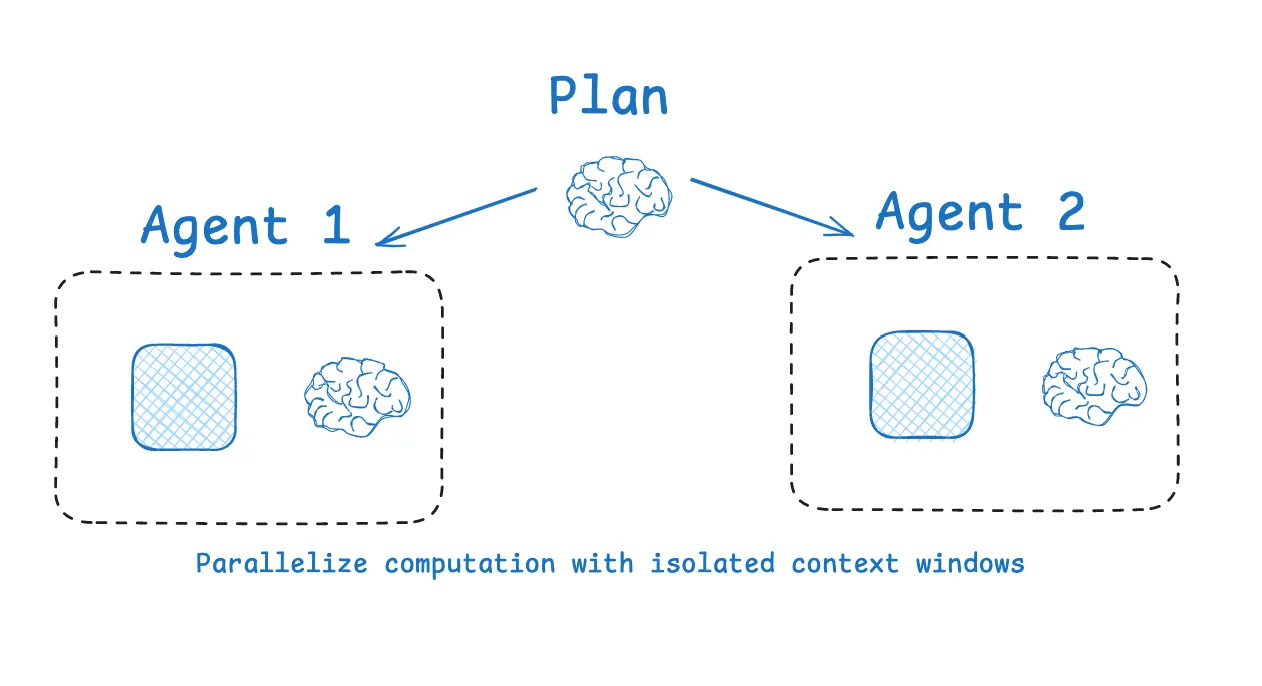

Multi-agent (多智能体)

One of the most popular ways to isolate context is to split it across sub-agents. A motivation for the OpenAI Swarm library was separation of concerns, where a team of agents can handle specific sub-tasks. Each agent has a specific set of tools, instructions, and its own context window.

隔离上下文最流行的方法之一是将其分配给多个子智能体。OpenAI Swarm 库的动机之一就是关注点分离,让智能体团队处理特定的子任务。每个智能体都有特定的工具集、指令和自己的上下文窗口。

Anthropic's multi-agent researcher makes a case for this: many agents with isolated contexts outperformed single-agent, largely because each subagent context window can be allocated to a more narrow sub-task. As the blog said:

[Subagents operate] in parallel with their own context windows, exploring different aspects of the question simultaneously.

Of course, the challenges with multi-agent include token use (e.g., up to 15× more tokens than chat as reported by Anthropic), the need for careful prompt engineering to plan sub-agent work, and coordination of sub-agents.

Anthropic 的多智能体研究系统证明了这一点:具有隔离上下文的多个智能体优于单智能体,主要是因为每个子智能体的上下文窗口可以专注于更小的子任务。子智能体并行运行,各自使用自己的上下文窗口,同时探索问题的不同方面。当然,多智能体架构的挑战包括 token 使用量 (Anthropic 报告称可能是聊天的 15 倍)、需要精心设计提示词来规划子智能体工作,以及子智能体之间的协调。

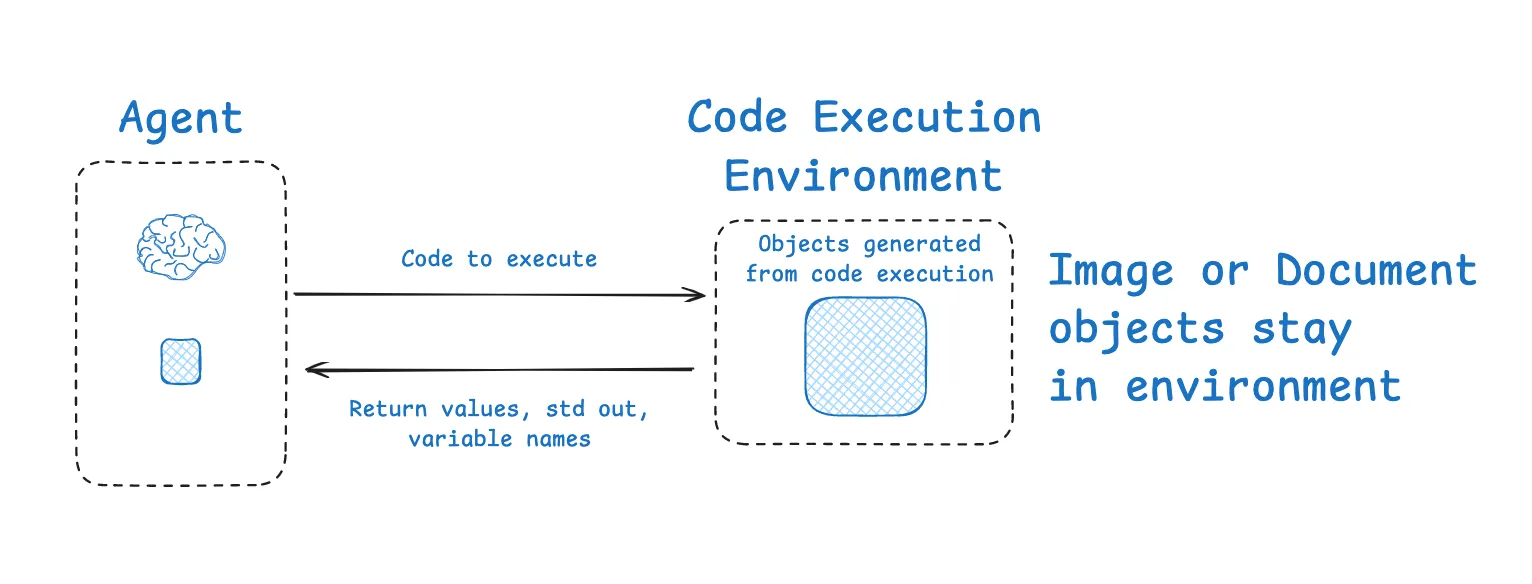

*Context Isolation with Environments (使用环境进行上下文隔离)

HuggingFace's deep researcher shows another interesting example of context isolation. Most agents use tool calling APIs, which return JSON objects (tool arguments) that can be passed to tools (e.g., a search API) to get tool feedback (e.g., search results). HuggingFace uses a CodeAgent, which outputs that contains the desired tool calls. The code then runs in a sandbox. Selected context (e.g., return values) from the tool calls is then passed back to the LLM.

HuggingFace 的 deep researcher 展示了另一个有趣的上下文隔离例子。大多数智能体带有 Tool Calling 功能的 LLM API,返回 JSON 对象作为工具参数。HuggingFace 使用 CodeAgent,输出包含所需工具调用的代码。代码在沙箱中运行,只有选定的上下文 (如返回值) 被传回 LLM。

This allows context to be isolated from the LLM in the environment. Hugging Face noted that this is a great way to isolate token-heavy objects in particular:

[Code Agents allow for] a better handling of state … Need to store this image / audio / other for later use? No problem, just assign it as a variable[in your state and you [use it later]].

这允许在环境中将上下文与 LLM 隔离。HuggingFace 指出,这特别适合隔离消耗大量 token 的对象:coding agent 可以更好地处理状态。需要存储图像、音频或其他内容供以后使用?没问题,只需将其分配为变量即可。

State (状态)

It's worth calling out that an agent's runtime state object can also be a great way to isolate context. This can serve the same purpose as sandboxing. A state object can be designed with a schema that has fields that context can be written to. One field of the schema (e.g., messages) can be exposed to the LLM at each turn of the agent, but the schema can isolate information in other fields for more selective use.

值得注意的是,智能体的运行时状态对象也是隔离上下文的好方法。这可以达到与沙箱相同的目的。状态对象可以设计成包含多个字段的模式,上下文可以写入这些字段。模式的某个字段 (如 messages) 可以在智能体的每个回合中暴露给 LLM,而其他字段的信息可以被隔离,以便更有选择性地使用。

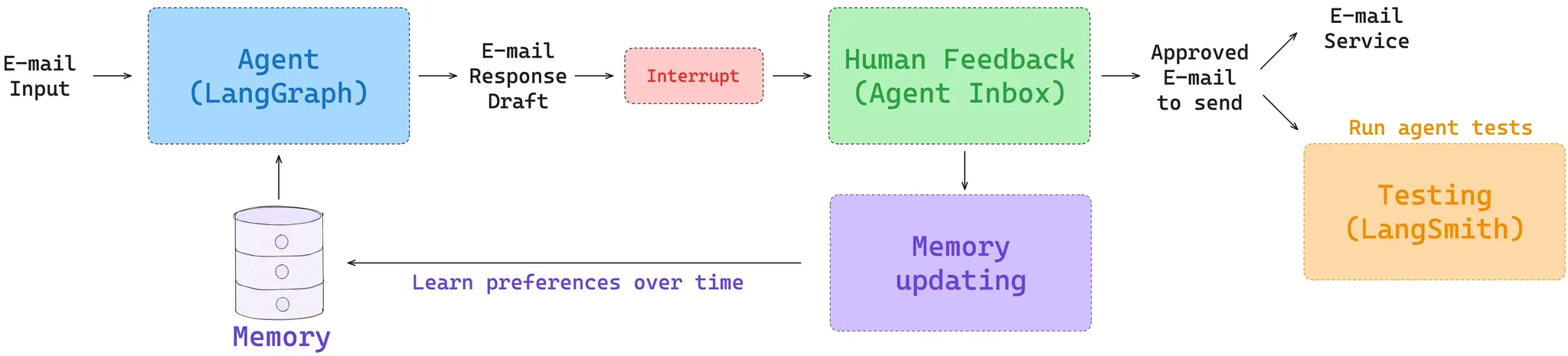

Context Engineering with LangSmith / LangGraph

So, how can you apply these ideas? Before you start, there are two foundational pieces that are helpful. First, ensure that you have a way to look at your data and track token-usage across your agent. This helps inform where best to apply effort context engineering. LangSmith is well-suited for agent tracing / observability, and offers a great way to do this. Second, be sure you have a simple way to test whether context engineering hurts or improve agent performance. LangSmith enables agent evaluation to test the impact of any context engineering effort.

如何实施?开始之前,有两个基础工作很重要。首先,确保你有方法查看数据并跟踪智能体的 token 使用情况。这有助于确定在哪里应用上下文工程最有效。LangSmith 非常适合智能体追踪和观测。其次,确保你有简单的方法来测试上下文工程是否改善了智能体性能。LangSmith 提供智能体评估功能来测试任何上下文工程工作的影响。

Write context

LangGraph was designed with both thread-scoped (short-term) and long-term memory. Short-term memory uses checkpointing to persist agent state across all steps of an agent. This is extremely useful as a "scratchpad", allowing you to write information to state and fetch it at any step in your agent trajectory.

LangGraph's long-term memory lets you to persist context across many sessions with your agent. It is flexible, allowing you to save small sets of files (e.g., a user profile or rules) or larger collections of memories. In addition, LangMem provides a broad set of useful abstractions to aid with LangGraph memory management.

LangGraph 设计了短期和长期记忆。短期记忆使用检查点来在智能体的所有步骤中持久化智能体状态。这作为 "scratchpad" 非常有用,允许你将信息写入状态并在智能体轨迹的任何步骤中获取。LangGraph 的长期记忆让你能够跨多个会话持久化上下文。它很灵活,可以保存小型文件集 (如用户配置文件或规则) 或更大的记忆集合。此外,LangMem 提供了一套有用的抽象方法来辅助记忆管理。

Select context

Within each node (step) of a LangGraph agent, you can fetch state. This give you fine-grained control over what context you present to the LLM at each agent step.

In addition, LangGraph's long-term memory is accessible within each node and supports various types of retrieval (e.g., fetching files as well as embedding-based retrieval on a memory collection). For an overview of long-term memory, see our Deeplearning.ai course. And for an entry point to memory applied to a specific agent, see our Ambient Agents course. This shows how to use LangGraph memory in a long-running agent that can manage your email and learn from your feedback.

在 LangGraph 智能体的每个节点 (步骤) 中,你可以获取状态。这让你对上下文有细粒度的控制。此外,LangGraph 的长期记忆在每个节点中都可访问,支持各种类型的检索 (如获取文件以及基于 embedding 的记忆检索)。

For tool selection, the LangGraph Bigtool library is a great way to apply semantic search over tool descriptions. This helps select the most relevant tools for a task when working with a large collection of tools. Finally, we have several tutorials and videos that show how to use various types of RAG with LangGraph.

对于工具选择,LangGraph Bigtool 库是对工具描述应用语义搜索的好方法。当处理大量工具集合时,这有助于选择与任务最相关的工具。

Compressing context

Because LangGraph is a low-level orchestration framework, you lay out your agent as a set of nodes, define the logic within each one, and define an state object that is passed between them. This control offers several ways to compress context.

One common approach is to use a message list as your agent state and summarize or trim it periodically using a few built-in utilities. However, you can also add logic to post-process tool calls or work phases of your agent in a few different ways. You can add summarization nodes at specific points or also add summarization logic to your tool calling node in order to compress the output of specific tool calls.

LangGraph 作为 low-level 编排框架,可以将智能体组织为多个节点(node)的形式,每个节点定义特定逻辑并通过 State 对象传递信息。

这种架构提供了多种上下文压缩方法:

- 消息(Message)总结:使用消息列表作为 state,定期总结或修剪历史记录

- 节点级压缩:在特定节点添加总结逻辑

- 工具输出处理:对工具调用结果进行后处理和压缩

Isolating context

LangGraph is designed around a state object, allowing you to specify a state schema and access state at each agent step. For example, you can store context from tool calls in certain fields in state, isolating them from the LLM until that context is required. In addition to state, LangGraph supports use of sandboxes for context isolation. See this repo for an example LangGraph agent that uses an E2B sandbox for tool calls. See this video for an example of sandboxing using Pyodide where state can be persisted. LangGraph also has a lot of support for building multi-agent architecture, such as the supervisor and swarm libraries. You can see these videos for more detail on using multi-agent with LangGraph.

LangGraph 围绕状态(State)对象设计,允许你指定状态 schema 并在每个步骤访问状态。例如,你可以将工具调用的上下文存储在状态的某些字段中,将它们与 LLM 隔离,直到需要该上下文。除了状态,LangGraph 还支持使用沙箱进行上下文隔离。LangGraph 还为构建多智能体架构提供了大量支持,例如 supervisor 和 swarm 库。

Conclusion

Context engineering is becoming a craft that agents builders should aim to master. Here, we covered a few common patterns seen across many popular agents today:

- Writing context - saving it outside the context window to help an agent perform a task.

- Selecting context - pulling it into the context window to help an agent perform a task.

- Compressing context - retaining only the tokens required to perform a task.

- Isolating context - splitting it up to help an agent perform a task.

LangGraph makes it easy to implement each of them and LangSmith provides an easy way to test your agent and track context usage. Together, LangGraph and LangGraph enable a virtuous feedback loop for identifying the best opportunity to apply context engineering, implementing it, testing it, and repeating.

上下文工程正在成为构建智能体必须掌握的一项技术,文章介绍了当今流行智能体系统中常见的四种模式:

- 写入上下文 - 将信息保存在上下文窗口之外,以帮助智能体执行任务

- 选择上下文 - 将相关信息拉入上下文窗口来帮助智能体执行任务

- 压缩上下文 - 只保留执行任务所需的 token

- 隔离上下文 - 将上下文分割以帮助智能体执行任务